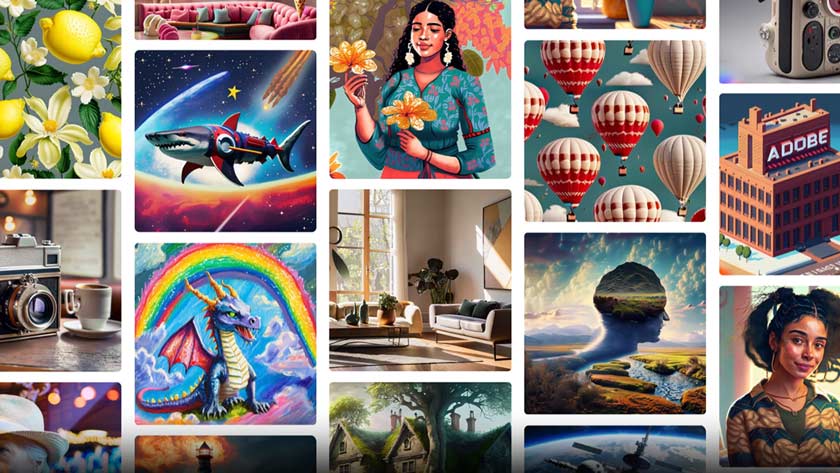

Adobe has recently introduced Firefly, a new family of generative AI models that helps users create original content from a text prompt. Unlike other AI art generators such as DALL-E and Midjourney, Firefly is meant to cover a broader range of creative features. It is still in development, and the beta program currently offers “Text to image” and “Text effects” capabilities.

Firefly includes several generative AI models: Text to image, Text effects, and Recolor vectors. Adobe is also developing further models for other use cases, such as Inpainting, Personalized results, Text to vector, Extend image, 3D to image, Text to pattern, Text to brush, Sketch to image, and Text to template. With these models, users will be able to describe what they want in everyday language to create custom vectors, brushes, or textures, and to generate context-aware images that fit into existing artwork. Adobe also says the AI will be able to generate a new look for a video from a natural-language description of mood or atmosphere.

Adobe has trained the model on a dataset drawn from the Adobe Stock library, openly licensed work, and public domain content. The training data does not include copies of customer content or Creative Cloud subscribers’ content. For now, Adobe Firefly is available only on its website as part of the beta program, but Adobe plans to integrate its features into its family of creative apps, including Photoshop, Premiere Pro, and Illustrator.

Adobe positions its AI as a collaborative tool that enhances creative work rather than one that replaces artists. It is also commendable that Adobe is transparent about the data used to train the model, something many companies avoid discussing. Users can sign up for Adobe Firefly by visiting the website and requesting access to the beta.